FAQs¶

This is a growing set of technical FAQs. The product FAQs on the Kedro website explain how Kedro can answer the typical use cases and requirements of data scientists, data engineers, machine learning engineers and product owners.

Installing Kedro¶

How can I check the version of Kedro installed? To check the version installed, type

kedro -Vin your terminal window.

Kedro documentation¶

Working with Notebooks¶

Kedro project development¶

Configuration¶

How do I change the setting for a configuration source folder?

How do I change the configuration source folder at run time?

How do I change the default overriding configuration environment?

Advanced topics¶

Nodes and pipelines¶

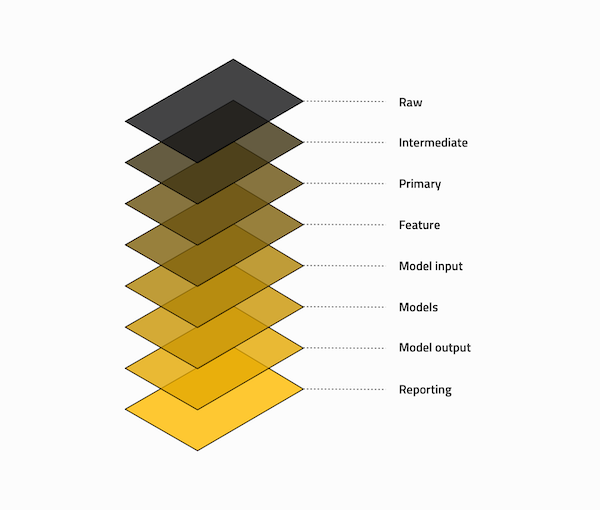

What is data engineering convention?¶

Bruce Philp and Guilherme Braccialli are the brains behind a layered data-engineering convention as a model of managing data. You can find an in-depth walk through of their convention as a blog post on Medium.

Refer to the following table below for a high level guide to each layer’s purpose

Note:The data layers don’t have to exist locally in the

datafolder within your project, but we recommend that you structure your S3 buckets or other data stores in a similar way.

Folder in data |

Description |

|---|---|

Raw |

Initial start of the pipeline, containing the sourced data model(s) that should never be changed, it forms your single source of truth to work from. These data models are typically un-typed in most cases e.g. csv, but this will vary from case to case |

Intermediate |

Optional data model(s), which are introduced to type your |

Primary |

Domain specific data model(s) containing cleansed, transformed and wrangled data from either |

Feature |

Analytics specific data model(s) containing a set of features defined against the |

Model input |

Analytics specific data model(s) containing all |

Models |

Stored, serialised pre-trained machine learning models |

Model output |

Analytics specific data model(s) containing the results generated by the model based on the |

Reporting |

Reporting data model(s) that are used to combine a set of |